LabVivant

The internet, social media, and virtual reality have revolutionized the way we connect with people globally, but they fall short when it comes to immersing oneself in strongly embodied experiences such as practicing martial arts. Engaging in these activities through a screen is less effective and less fulfilling. Motion capture technologies exist, but they tend to be costly and intrusive, and they require high-end computing power, which makes them impractical on mobile devices.

Objective:

To develop a gesture controller for mobile VR that will enable online participants to engage in live martial-arts events through embodied interactions, including voice and movement.

Method:

I collaborated with a multidisciplinary team of experts in gamification, arts and technology, AI, virtual communities, 3D art, martial arts, and social sciences. Throughout the project I also worked closely with our business partner, Le Cercle, and our nonprofit partner, Mitacs Inc.

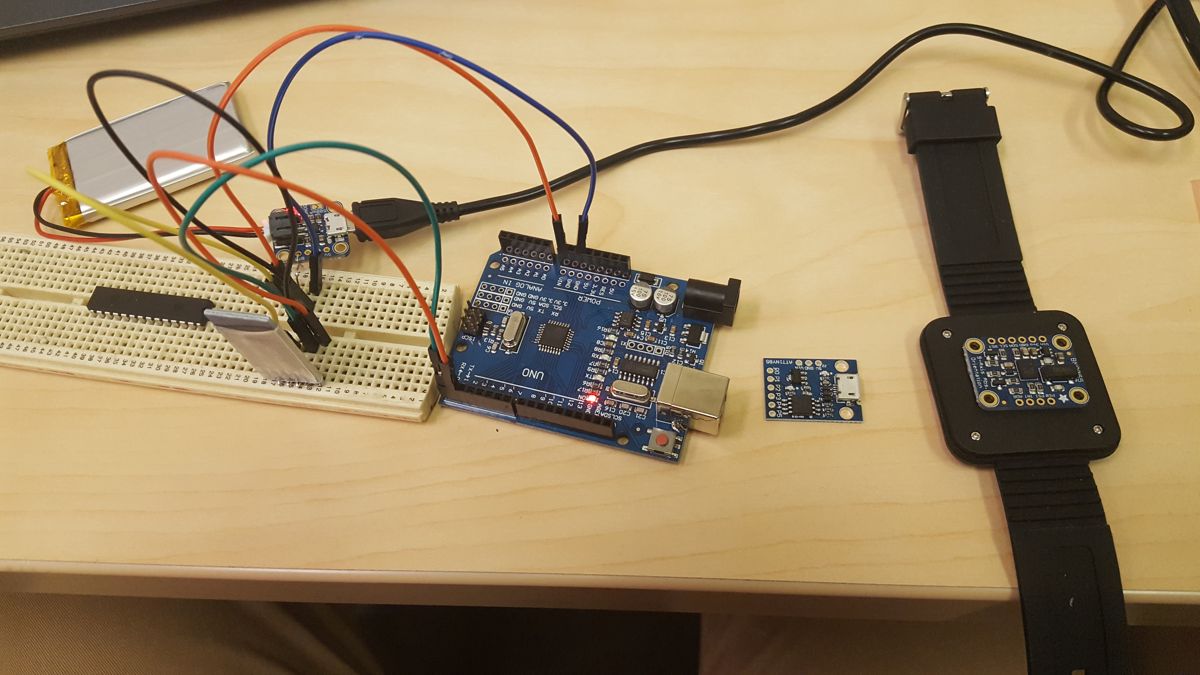

I developed a wearable system that captures the user's hand movements and voice using a 9-DOF (nine degrees of freedom) IMU (inertial measurement unit) and a microphone.

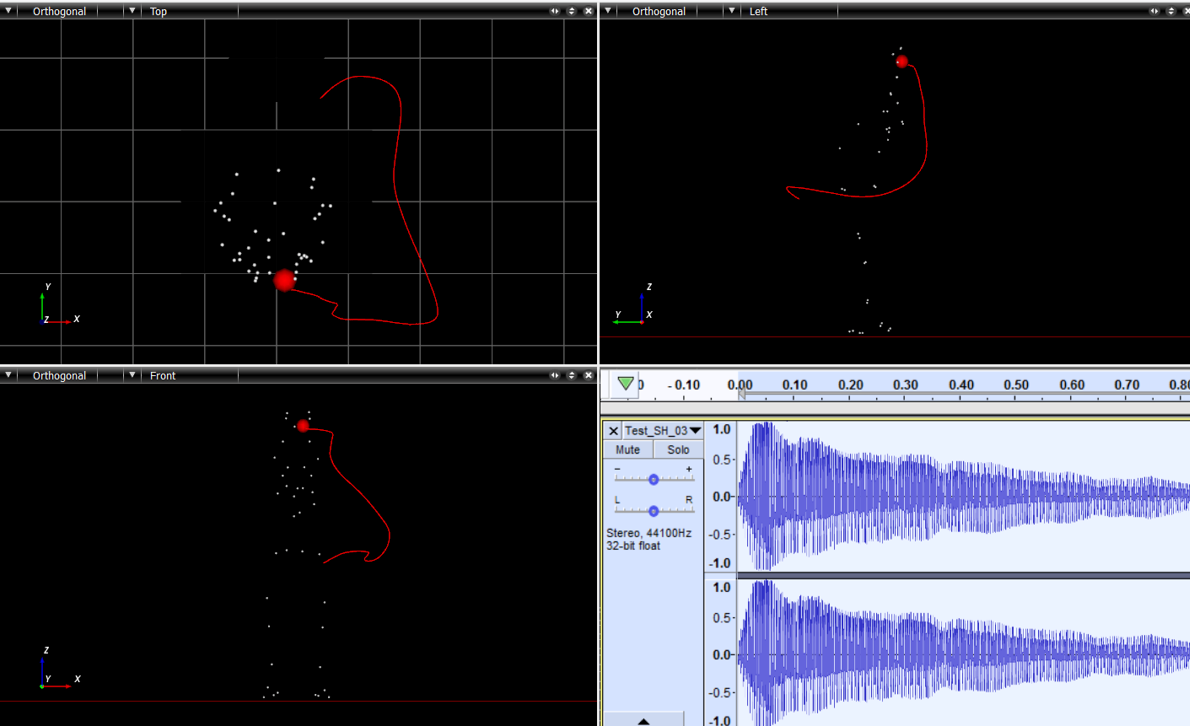

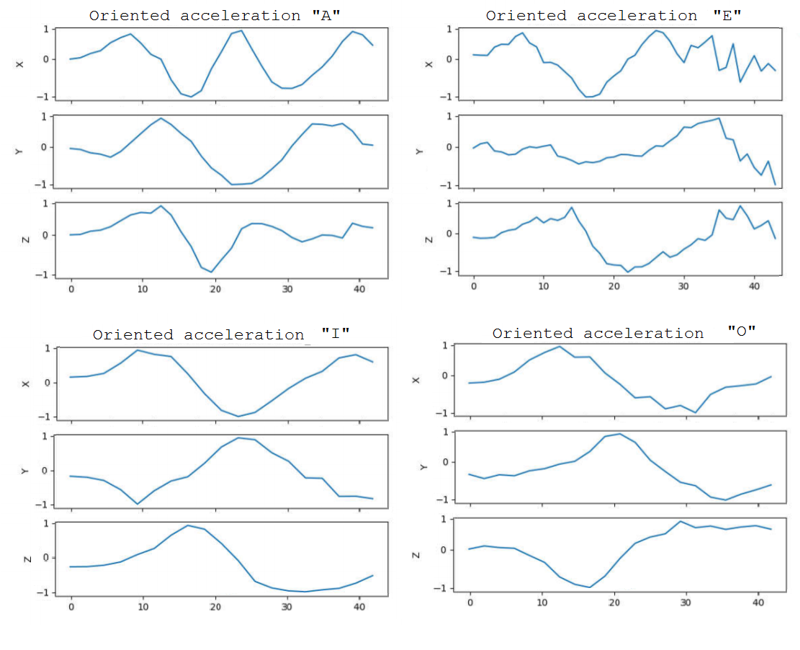

I processed the IMU data by rotating each acceleration vector by the sensor's orientation quaternion, which expresses the motion relative to the Earth's magnetic field instead of the sensor's own axes. I then normalized the values so that the same gesture is represented consistently regardless of the user's speed and acceleration.
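The project's processing code is not shown here. The following is a minimal sketch of the two steps under stated assumptions: the orientation quaternion is a unit quaternion `(w, x, y, z)` that maps sensor axes to the reference frame (some fusion filters use the inverse convention, in which case the conjugate is needed), and "normalized" is interpreted as resampling each gesture to a fixed length (speed) and scaling it to unit peak magnitude (intensity). The function names and the default of 40 samples are hypothetical; 40 samples × 3 axes would give 120 values, but the source does not say how the motion network's inputs were built.

```python
import numpy as np

def quat_rotate(q, v):
    """Rotate 3-vector v by unit quaternion q = (w, x, y, z), i.e. q * v * conj(q).

    Assumption: q maps sensor axes to the reference frame.
    """
    w = q[0]
    u = np.asarray(q[1:], dtype=float)
    v = np.asarray(v, dtype=float)
    t = 2.0 * np.cross(u, v)
    # Expanded sandwich product: v' = v + w*t + u x t, with t = 2 (u x v)
    return v + w * t + np.cross(u, t)

def to_earth_frame(quats, accels):
    """Express each sensor-frame acceleration sample in the orientation filter's reference frame."""
    return np.array([quat_rotate(q, a) for q, a in zip(quats, accels)])

def normalize_gesture(samples, n_points=40):
    """Resample a (T, 3) gesture to n_points (removes speed) and scale to unit peak norm (removes intensity).

    n_points=40 is a hypothetical choice, not a value from the project.
    """
    samples = np.asarray(samples, dtype=float)
    t_src = np.linspace(0.0, 1.0, len(samples))  # original timeline mapped to [0, 1]
    t_dst = np.linspace(0.0, 1.0, n_points)      # fixed-length timeline
    resampled = np.column_stack(
        [np.interp(t_dst, t_src, samples[:, i]) for i in range(3)]
    )
    peak = np.linalg.norm(resampled, axis=1).max()
    return resampled / peak if peak > 0 else resampled
```

With these helpers, a recorded gesture would become a fixed-size feature vector via `normalize_gesture(to_earth_frame(quats, accels)).ravel()`, so a slow, gentle repetition and a fast, forceful one of the same movement map to nearly the same input.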

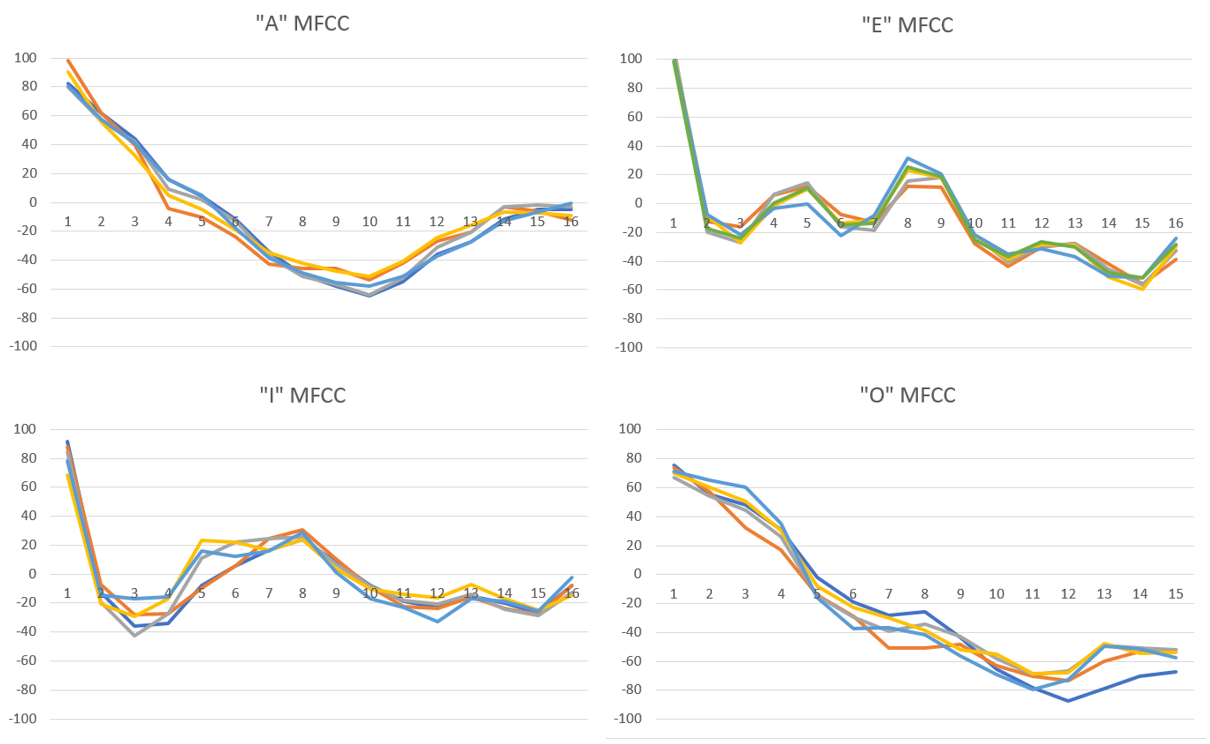

I implemented an MFCC (mel-frequency cepstral coefficients) feature extractor that uses the fast Fourier transform and the discrete cosine transform to preprocess Shintaido phonemes.
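The original extractor is not reproduced here. As a minimal sketch, this is the textbook MFCC pipeline in NumPy: frame and window the signal, take the FFT power spectrum, apply a triangular mel filterbank, take the log, and decorrelate with an orthonormal DCT-II. The parameters (16 kHz audio, 25 ms frames with a 10 ms hop, 512-point FFT, 26 filters, 13 coefficients) are common defaults assumed for illustration, not values from the project.

```python
import numpy as np

def hz_to_mel(f):
    return 2595.0 * np.log10(1.0 + f / 700.0)

def mel_to_hz(m):
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)

def mel_filterbank(n_filters, n_fft, sample_rate):
    """Triangular filters spaced evenly on the mel scale, shape (n_filters, n_fft // 2 + 1)."""
    mel_points = np.linspace(hz_to_mel(0.0), hz_to_mel(sample_rate / 2.0), n_filters + 2)
    bins = np.floor((n_fft + 1) * mel_to_hz(mel_points) / sample_rate).astype(int)
    fbank = np.zeros((n_filters, n_fft // 2 + 1))
    for i in range(1, n_filters + 1):
        left, center, right = bins[i - 1], bins[i], bins[i + 1]
        for k in range(left, center):   # rising edge
            fbank[i - 1, k] = (k - left) / (center - left)
        for k in range(center, right):  # falling edge
            fbank[i - 1, k] = (right - k) / (right - center)
    return fbank

def dct_ii(x, n_out):
    """Orthonormal DCT-II along the last axis, keeping the first n_out coefficients."""
    n = x.shape[-1]
    k = np.arange(n_out)[:, None]
    m = np.arange(n)[None, :]
    basis = np.cos(np.pi * k * (2 * m + 1) / (2 * n))
    scale = np.full(n_out, np.sqrt(2.0 / n))
    scale[0] = np.sqrt(1.0 / n)
    return (x @ basis.T) * scale

def mfcc(signal, sample_rate=16000, frame_len=400, hop=160,
         n_fft=512, n_filters=26, n_mfcc=13):
    """Return an (n_frames, n_mfcc) array of MFCCs for a mono signal."""
    signal = np.asarray(signal, dtype=float)
    if len(signal) < frame_len:
        signal = np.pad(signal, (0, frame_len - len(signal)))
    n_frames = 1 + (len(signal) - frame_len) // hop
    idx = np.arange(frame_len)[None, :] + hop * np.arange(n_frames)[:, None]
    frames = signal[idx] * np.hamming(frame_len)               # overlapping windowed frames
    power = np.abs(np.fft.rfft(frames, n=n_fft)) ** 2 / n_fft  # FFT power spectrum
    energies = power @ mel_filterbank(n_filters, n_fft, sample_rate).T
    return dct_ii(np.log(energies + 1e-10), n_mfcc)            # log, then DCT
```

The DCT step is what turns overlapping, correlated filterbank energies into a compact set of coefficients, which suits the very small classifier the project used.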

During a motion-capture session, I recorded, preprocessed, and labeled data for five Shintaido motions and phonemes performed by a Shintaido expert.

I trained two artificial neural network (ANN) classifiers with minimal architectures so that they could run in real time on low-powered mobile devices, at the cost of recognizing only a few gesture categories. The phoneme classifier had layers of 16, 11, and 6 neurons, and the motion classifier had layers of 120, 80, 40, 20, and 6 neurons.
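To show how small these networks are, here is a sketch of both as fully connected layers in NumPy. It assumes the first number in each list is the input size, ReLU hidden layers, and a softmax output; the source states only the neuron counts, and the weights below are random, not trained.

```python
import numpy as np

PHONEME_LAYERS = [16, 11, 6]
MOTION_LAYERS = [120, 80, 40, 20, 6]

def init_mlp(sizes, rng):
    """Random (untrained) He-scaled weights and zero biases for a dense network."""
    return [(rng.standard_normal((n_in, n_out)) * np.sqrt(2.0 / n_in), np.zeros(n_out))
            for n_in, n_out in zip(sizes[:-1], sizes[1:])]

def forward(params, x):
    """ReLU on hidden layers, softmax on the output; returns class probabilities."""
    for i, (w, b) in enumerate(params):
        x = x @ w + b
        if i < len(params) - 1:
            x = np.maximum(x, 0.0)
    x = x - x.max(axis=-1, keepdims=True)  # numerically stable softmax
    e = np.exp(x)
    return e / e.sum(axis=-1, keepdims=True)

def n_params(sizes):
    """Total weights plus biases for a dense network with the given layer sizes."""
    return sum(n_in * n_out + n_out for n_in, n_out in zip(sizes[:-1], sizes[1:]))

rng = np.random.default_rng(0)
motion = init_mlp(MOTION_LAYERS, rng)
probs = forward(motion, rng.standard_normal(120))
print(n_params(PHONEME_LAYERS), n_params(MOTION_LAYERS))  # 259 13866
```

Under these assumptions the phoneme network has 259 parameters and the motion network 13,866, so one forward pass costs roughly 14,000 multiply-adds, which is negligible even on a low-end phone.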

I wrote a C# loader that reads the architecture and weights of the TensorFlow-trained neural networks and runs them natively in Unity3D.
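The file format the project used is not documented. As a hypothetical sketch of the idea, the Python side below exports each dense layer's shape, activation, flattened weights, and bias to JSON, then reloads the file and runs a forward pass the way a C# loader would. The weights can come from Keras, where a `Dense` layer's `get_weights()` returns the kernel, shaped `(n_in, n_out)`, and the bias. The schema and function names are assumptions for illustration.

```python
import json
import numpy as np

def export_model(layers, activations):
    """Serialize a dense network to a JSON string (hypothetical schema).

    layers: list of (weights, bias), weights shaped (n_in, n_out).
    activations: one name per layer, "relu", "softmax", or "linear".
    Weights are flattened row-major so a C# reader only has to parse 1-D arrays.
    """
    return json.dumps({"layers": [
        {"in": int(w.shape[0]), "out": int(w.shape[1]), "activation": act,
         "weights": np.asarray(w, dtype=float).ravel().tolist(),
         "bias": np.asarray(b, dtype=float).tolist()}
        for (w, b), act in zip(layers, activations)
    ]})

def load_and_run(model_json, x):
    """Rebuild the network from JSON and run one forward pass on a 1-D input."""
    x = np.asarray(x, dtype=float)
    for layer in json.loads(model_json)["layers"]:
        w = np.array(layer["weights"]).reshape(layer["in"], layer["out"])
        x = x @ w + np.array(layer["bias"])
        if layer["activation"] == "relu":
            x = np.maximum(x, 0.0)
        elif layer["activation"] == "softmax":
            e = np.exp(x - x.max())
            x = e / e.sum()
    return x
```

Keeping the format to plain numbers and shapes means the Unity side needs no TensorFlow runtime, only a JSON parser and a few matrix-vector products per layer.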

I built a multiplayer "digital dojo" in which users interact through martial-arts movements and phonemes, along with a Google Cardboard VR version for greater immersion.

I authored a peer-reviewed research paper, published and presented in 2019 at the IS&T International Symposium on Electronic Imaging, a conference hosted by the Society for Imaging Science and Technology.

Tools:

Unity3D

C#

Google Cardboard

Arduino

Motion capture

TensorFlow

Results:

Developing the wearable sensor system

Collection of training data

Gesture and phoneme data after preprocessing

Research articles

Juan Nino, Jocelyne Kiss, Geoffrey Edwards, Ernesto Morales, Sherezada Ochoa, and Bruno Bernier (2019). "Enhancing Mobile VR Immersion: A Multimodal System of Neural Networks Approach to an IMU Gesture Controller." In Proc. IS&T Int’l Symp. on Electronic Imaging: The Engineering Reality of Virtual Reality 2019, pp. 184-1 to 184-6. https://doi.org/10.2352/ISSN.2470-1173.2019.2.ERVR-184